Systems that use image data to guide user interactions when creating models already exist. However, the amount of time and effort required to get those inputs just right can make these systems cumbersome and inefficient. VideoTrace makes good progress in alleviating this problem by using computer vision techniques to obtain data that ultimately reduce the amount of user input needed.

Some techniques attempt to use images of the object to be modeled to automatically reconstruct the object's points in 3D. However, these methods tend to suffer from ambiguities in the image data itself, as well as problems with camera motion degeneracies and lack of distinct feature points found on the object's surface. In VideoTrace, a user instead sketches lines and curves over the object, allowing for an automatic reconstruction that interprets these 2D constructs and uses them in the 3D reconstruction process.

As you can see, VideoTrace melds the two worlds of user-guided and automatic reconstruction when creating a 3D model based on an object found in a video sequence. You shouldn't view it as a complete replacement for 3D modeling, but rather as an assistant in the process.

How Does It Work?

A preprocessing stage must be completed before the user even looks at the video sequence. At this point, structure and motion analysis is performed: a sparse set of 3D points is estimated, along with parameters describing the camera that took the video, such as focal length. The freely available Voodoo Camera Tracker is used to calculate this. The computed 3D points give three-dimensional context and meaning to the lines and curves that the user will draw in 2D.

Also as part of the preprocessing stage, pixels are clustered by color into what are called "superpixels". Each subsequent operation then works on these cells of pixels rather than on individual pixels.
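To make the superpixel idea concrete, here is a minimal sketch that groups neighboring pixels of similar color by simple region growing. This is not VideoTrace's actual segmentation method, which the article does not detail; the function names, the tiny test image, and the `threshold` parameter are all assumptions made for illustration.

```python
def color_distance(a, b):
    """Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def label_superpixels(image, threshold):
    """Assign a region label to every pixel by growing regions of similar color.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    A neighbor joins a region if its color is within `threshold` of the
    region's seed pixel (a deliberately simple, hypothetical criterion).
    """
    rows, cols = len(image), len(image[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue  # already part of a region
            seed = image[r][c]
            labels[r][c] = next_label
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and labels[ny][nx] == -1 \
                            and color_distance(image[ny][nx], seed) <= threshold:
                        labels[ny][nx] = next_label
                        stack.append((ny, nx))
            next_label += 1
    return labels


RED, BLUE = (255, 0, 0), (0, 0, 255)
tiny_image = [
    [RED, RED, BLUE, BLUE],
    [RED, RED, BLUE, BLUE],
]
superpixel_labels = label_superpixels(tiny_image, threshold=30)
```

Later steps can then reason about a handful of labeled regions instead of every pixel, which is the point of working with superpixels.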

Now the user is ready to begin tracing the object they want to model. First, they might use lines. These lines don't have to be drawn so accurately that they fit the object down to the pixel, since the superpixels are taken into account when the drawn lines are refined. Each set of lines that forms a closed polygon becomes one of the 3D object's faces.

As the user is drawing, they can flip to another frame of video and continue working there. The model computed so far from the first frame will be reprojected into the new frame. This means that even if the camera is looking at the object from a different viewpoint, the model drawn will still fit fairly well around it.
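Reprojection itself is standard pinhole-camera geometry. The sketch below shows how a 3D model vertex could be mapped into a frame once that frame's camera pose and focal length are known. It assumes an ideal pinhole camera with no lens distortion; the function name and all numeric values are hypothetical, not taken from VideoTrace.

```python
def project(point, rotation, translation, focal, cx, cy):
    """Project a 3D world point into pixel coordinates for one video frame.

    rotation: 3x3 matrix (list of rows) and translation: 3-vector describing
    the camera pose for that frame; focal is the focal length in pixels and
    (cx, cy) is the principal point.
    """
    # World coordinates -> camera coordinates: X_cam = R * X + t
    cam = [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]
    if cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division followed by scaling into pixel units
    return (focal * cam[0] / cam[2] + cx, focal * cam[1] / cam[2] + cy)


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# The same model vertex seen from two (made-up) camera positions.
vertex = (1.0, 0.0, 4.0)
in_frame_a = project(vertex, IDENTITY, (0.0, 0.0, 0.0), 800.0, 320.0, 240.0)
in_frame_b = project(vertex, IDENTITY, (1.0, 0.0, 0.0), 800.0, 320.0, 240.0)
```

Applying this to every vertex of the model with the new frame's camera parameters is what lets the traced outline follow the object as the viewpoint changes.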

This is possible because the system is working in the background to compute the 3D shape and position of the model, continuously re-estimating the results based on new lines and curves drawn by the user.

Speaking of curves, the curves that can be drawn are Non-Uniform Rational B-Splines, or NURBS. The idea is that these 2D constructs can be drawn within the 3D space of the objects in the video. The estimation of the curves is both fast enough to be real-time for interactivity and flexible enough to handle ambiguity. These curves can also be constrained to a plane if necessary.
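For readers unfamiliar with NURBS, the sketch below evaluates a point on such a curve using the textbook Cox-de Boor recursion. It only illustrates what a NURBS curve is, not how VideoTrace estimates one from a user's stroke; the function names and the quarter-circle example data are my own choices.

```python
import math


def bspline_basis(i, p, u, knots):
    """Cox-de Boor recursion: value of the i-th degree-p basis function at u."""
    if p == 0:
        if knots[i] <= u < knots[i + 1]:
            return 1.0
        # Close the final non-empty span so the curve's end point is included.
        if u == knots[-1] and knots[i] < u <= knots[i + 1]:
            return 1.0
        return 0.0
    value = 0.0
    left_span = knots[i + p] - knots[i]
    if left_span > 0:
        value += (u - knots[i]) / left_span * bspline_basis(i, p - 1, u, knots)
    right_span = knots[i + p + 1] - knots[i + 1]
    if right_span > 0:
        value += ((knots[i + p + 1] - u) / right_span
                  * bspline_basis(i + 1, p - 1, u, knots))
    return value


def nurbs_point(u, degree, control_points, weights, knots):
    """Evaluate the rational (weighted) curve at parameter u."""
    dims = len(control_points[0])
    numerator = [0.0] * dims
    denominator = 0.0
    for i, (point, weight) in enumerate(zip(control_points, weights)):
        blend = weight * bspline_basis(i, degree, u, knots)
        denominator += blend
        for d in range(dims):
            numerator[d] += blend * point[d]
    return tuple(component / denominator for component in numerator)


# A quarter of the unit circle as a quadratic NURBS curve.
QUARTER_CIRCLE = dict(
    degree=2,
    control_points=[(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)],
    weights=[1.0, math.sqrt(2) / 2, 1.0],
    knots=[0, 0, 0, 1, 1, 1],
)
midpoint = nurbs_point(0.5, **QUARTER_CIRCLE)
```

The weights are what make the curve "rational": with the middle weight set as above, the curve traces an exact circular arc, which a plain polynomial B-spline cannot do.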

From these curves, the user can create extrusions. The curves are dragged, and the vector representing the direction of movement is the normal of the plane the curve lies in. With multiple curves, surfaces can be generated in the final reconstruction, where the object points that are available from the preprocessing stage can be used to help fit the surfaces correctly to the actual object.
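As a rough illustration of extrusion, the sketch below sweeps a sampled planar curve along a direction vector and builds one quad face between each pair of neighboring samples. The function name, the sample curve, and the choice of quads are assumptions for the example; the article does not describe VideoTrace's internal mesh representation.

```python
def extrude(curve_points, direction, distance):
    """Sweep a polyline along `direction` and return (vertices, quad_faces).

    curve_points: list of (x, y, z) samples along the curve.
    direction: vector to sweep along, here standing in for the normal of
    the plane the curve lies in.
    Each face is a tuple of four vertex indices.
    """
    offset = tuple(distance * d for d in direction)
    swept = [tuple(p[i] + offset[i] for i in range(3)) for p in curve_points]
    vertices = [tuple(p) for p in curve_points] + swept
    n = len(curve_points)
    # One quad between each pair of neighboring samples and their swept copies.
    faces = [(i, i + 1, n + i + 1, n + i) for i in range(n - 1)]
    return vertices, faces


# A curve sampled in the z = 0 plane, extruded along that plane's normal.
profile = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0), (2.0, 0.0, 0.0)]
extruded_vertices, extruded_faces = extrude(profile, (0.0, 0.0, 1.0), 2.0)
```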

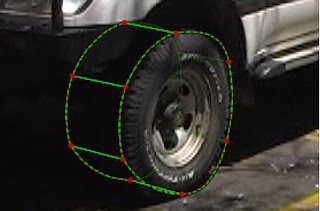

(Image from SIGGRAPH article)

Finally, because not every part of an object is visible on screen at one time, the user can be limited in what they are able to trace. Luckily, there is a mechanism that helps alleviate that problem: the user can copy and mirror a set of surfaces about a particular plane.
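Mirroring about a plane is a simple reflection. The sketch below reflects each vertex across a plane given by a point and a normal, and reverses each face's vertex order so the mirrored faces still point outward. The function names and sample data are hypothetical; this shows the geometry only, not VideoTrace's implementation.

```python
import math


def mirror_point(point, plane_point, normal):
    """Reflect a 3D point across the plane through plane_point with the given normal."""
    length = math.sqrt(sum(n * n for n in normal))
    unit = [n / length for n in normal]
    # Signed distance from the point to the plane
    dist = sum((point[i] - plane_point[i]) * unit[i] for i in range(3))
    return tuple(point[i] - 2 * dist * unit[i] for i in range(3))


def mirror_surfaces(vertices, faces, plane_point, normal):
    """Return a mirrored copy of a mesh.

    Reflection flips orientation, so each face's index order is reversed
    to keep the mirrored faces consistently wound.
    """
    mirrored_vertices = [mirror_point(v, plane_point, normal) for v in vertices]
    mirrored_faces = [tuple(reversed(face)) for face in faces]
    return mirrored_vertices, mirrored_faces


# One triangle on the visible side of the plane x = 0, copied to the hidden side.
visible_vertices = [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
visible_faces = [(0, 1, 2)]
hidden_vertices, hidden_faces = mirror_surfaces(
    visible_vertices, visible_faces, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
)
```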

Conclusion

As mentioned before, VideoTrace is not intended to replace other modeling software. Some problems may occur when using it that need to be touched up later on. For example, because each surface is estimated individually, the global properties of the structure (regularity and symmetry, for instance) may not be captured.

Nonetheless, when you have a look at the videos (larger or smaller) of VideoTrace in action, I think you will agree that the results can be impressive. There is no doubt that the video game and animation industries should be very interested in such a package to help speed up their modeling pipelines.
